NextGen Network:

How AI can work for humanity

The NextGen Network:

What should the future look like? We must decide.

Imagine a future plagued by challenges that ignore national borders — inequality, environmental destruction, and unemployment. There is also a future where people engage across borders, using new technology to address social, health, environmental, and economic challenges. Tools like artificial intelligence could enable people to benefit from an inclusive economy, sustainable community, and access to political processes.

What should the future look like? We must decide. This shared belief in agency and collective action led the Aspen Institute’s International Partners and Microsoft to create the NextGen Network. The NextGen Network brings together young leaders to develop an understanding of how new technologies, like artificial intelligence, will affect their lives and societies. With over 130 young people, the Network includes leaders from technology, business, policy, journalism and civil society in Mexico, Germany, Central Europe, India, France, and the United Kingdom.

As younger generations take the lead, it will be critical to ensure their ideas are represented in discussions and decisions about the role of technology in society. The Network provides a platform to exchange ideas about governing technology, and amplifies recommendations to those leading now. By sharing knowledge across sectors and generations, the Network seeks solutions to deliver ethical AI and address challenges that communities face across the world.

The impact of COVID-19 globally stresses the unprecedented challenges we face and emphasizes how important young leaders' perspectives are to leverage new technologies for an equitable and just future. We hope for the NextGen Network to continue shaping the conversation around technology and society, and deepen an intergenerational dialogue on the pressing issues of our time.

COVID-19 & Artificial Intelligence

BY CLAUDIA MAY DEL POZO

AI is central to improving our monitoring and experience in the pandemic, but we must be careful about over-hyping the technology’s potential.

Just when we thought humanity was unstoppable, a global pandemic reminds us we are not supernatural and still remain subject to the whimsicality of Mother Earth. Yet, our ability as humans to continually grow our capabilities has provided us with a new ally in this fight against one of the deadliest pandemics our world has seen: Artificial Intelligence (AI). AI is central to improving our monitoring and experience in the pandemic, but we must be careful about over-hyping the technology’s potential. Making the most of AI during this pandemic requires hedging its advantages with a realistic understanding of its limitations. While AI cannot predict rare and huge events, it can help us manage them at a granular level.

AI is helping us respond to the crisis in a myriad of ways. At a societal level, governments across the world are turning to machine learning to understand the spread of COVID-19, enabling precise resource allocation. Individually, AI enables tracking systems and apps to help us stay physically and mentally healthy. AI is also the reason we can hope to develop a vaccine soon, thanks to its simulation capabilities and ability to accelerate research processes.

While tech proponents might celebrate the accelerated adoption of AI at institutional and individual levels due to the pandemic, it is important to realize AI is a young field and has yet to prove itself a trustworthy technology, especially when lives are at stake. Without due diligence and cross-sector processes, AI could create more harm than good in the long term by introducing unintended consequences and systemic bias. We do not have the luxury of getting our priorities wrong any longer. The question is not whether to adopt AI; it is how we ensure a responsible and trustworthy adoption of AI. If every cloud has a silver lining, the pandemic might usher in a new era of human-centered AI.

Claudia Del Pozo

NextGen Network Member; Head of Operations, C Minds

Key Takeaways from the NextGen Network:

AI should be human-centered, with transparency and accountability as paramount features.

Overwhelmingly, AI will disrupt labor markets and the economy around the world.

AI will help NextGen members’ careers and countries.

Governments must lead in addressing the challenges of AI with tech companies and international organizations.

AI’s impact on medicine, health, and sustainability will be positive, yet it will worsen income inequality.

SETTING THE SCENE

From Telegraph Lines to Lines of Code

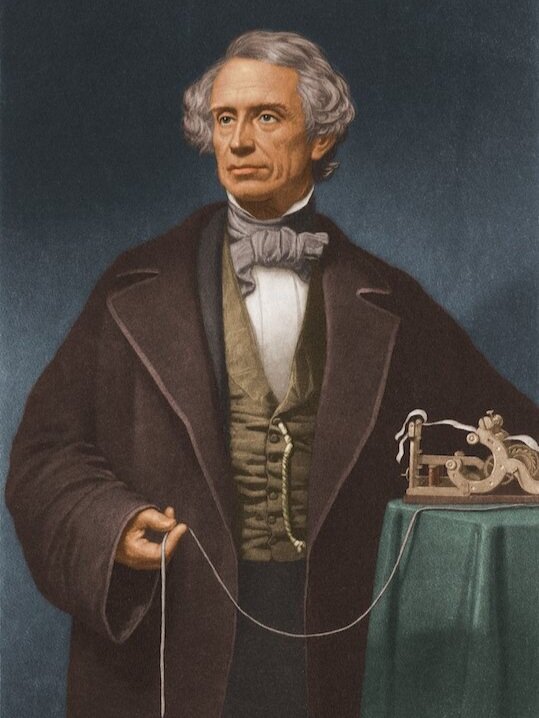

“What God hath wrought.”

The message sent by Samuel Morse in 1844 from a room deep in the United States Capitol

In May 1844, American inventor Samuel Morse transmitted the first message across 44 kilometers of electric telegraph line connecting Washington, DC, to Baltimore. The initial transmission, suggested to him by a friend’s young daughter, was the biblical verse “What God hath wrought.”[i] The message, sent from a room deep in the United States Capitol, which bears a plaque commemorating the world’s first electronic communication, captured the sense of wonder, a realization of the transformative power that this foundational general-purpose technology could bring for a young industrial age. Before long, telegraph lines pinging Morse code grafted nations together, enabled new supply chains, and revolutionized warfare. Just over one hundred years later, in 1946, that same Capitol room became home to the Joint Committee on Atomic Energy, the governing body for the harnessing of nuclear power for peace and war[ii]. It was a new age, the atomic age, borne on the back of another general-purpose technology freighted with potential, empowerment, emancipation, and frightening destruction all in one.

Today, artificial intelligence (AI) is poised to join the telegraph and nuclear power as a hinge technology defining yet another age — the deep digital age. This algorithmic secret sauce will enable new forms of medical screening and patient treatment; safer, self-driving vehicles; easier, more natural man-machine communication; more efficient logistics; better farming techniques and crop yields; and faster decision making in everything from insurance to banking, policing, and national security.

Yet, AI is also putting new strains on the governing architecture everywhere, confronting societies with ethical, economic, and political differences that need to be reconciled. Across the world, governments, tech companies, the Organization for Economic Co-operation and Development, the European Union, NATO, the Chinese Communist Party, and even the Vatican have been grappling with the ethical implications of this almost Promethean force that could arise from deep learning, Bayesian models, and generative adversarial networks. What God hath wrought, indeed.

A decade after that first telegraph message, Henry David Thoreau warned in “Walden” about the threat that “men have become the tools of their tools.”[iii] The changing relationship between man and machine was a central anxiety of early modernity. Today, Israeli historian Yuval Noah Harari asks in “Homo Deus” whether the modern covenant is an exchange of meaning for power — the more power we get, the less meaning exists in our affairs[iv]. His assertion gets at one of AI’s core ethical conundrums — namely, the ability to centralize and process data for the purpose of analysis and action that, in some instances, could limit personal autonomy, agency, and dignity. In essence, AI’s massive enabling potential could give people more tailored entertainment, faster production lines, more accurate health care treatment, and better matchmaking. At the same time, it could leave people unmoored from the identity and self-worth they derive as they determine how they work, play, and build relationships. How can humanity maintain personal autonomy and agency in a world where decision making, itself, is increasingly automated?

This loss of agency poses other ethical conundrums as well. The danger exists that AI will turbocharge some of society’s most pernicious structural inequalities. AI processes often automate biases like racism, sexism, religious prejudice, and homophobia. AI also can harden power asymmetries. If users (as workers, citizens, consumers, governments) cede so much agency to AI algorithms, it raises the following question: Who controls the algorithms? Authoritarian governments that use AI to surveil their citizens and suppress opposition? Companies that, at times, use AI to buck antitrust law, lock in market dominance, and reduce the leverage of people as individuals? Are we building in the mechanisms to absorb AI’s disruptions, distribute its gains, and preserve political legitimacy and personal self-worth?

[i] Library of Congress. First telegraphic message---24 May 1844.

[ii] Architect of the Capitol. Old Supreme Court Chamber.

[iii] Thoreau, Henry David. Walden, and On the Duty of Civil Disobedience.

[iv] Harari, Yuval Noah. Homo Deus: A brief history of tomorrow. (Random House, 2016).

Data and Its Discontents

Human dignity is inviolable. To respect and protect it shall be the duty of all state authority.

Article 1 of the German Constitution

We certainly still can build these mechanisms to take advantage of AI’s benefits and protect against its challenges, but the window to respond is closing. AI law is still in its infancy. It is essentially at the point of online data privacy law in 1995 — before a series of laws like the EU Data Protection Directive (1995), HIPAA (1996), and Gramm-Leach-Bliley (1999) began setting a somewhat uneven rules landscape for data protection in the digital world. Many would argue that today’s internet ethos is a result of not taking the chance to create a legal and moral structure online more seriously. What lessons can we learn from the early internet age to think about undergirding AI in a way that is built to last? How should that legal framework look to match our values? How can we align and coordinate regulatory models in areas like privacy, competition and fairness to make sure they are reflected in AI governance?

Take issues related to data ethics, one of the direct antecedents to AI ethics. Across the world, data regulation varies distinctly by country, even between liberal democracies. For example, Germany’s approach to personal data protection is based on the Kantian conception of human dignity. The German social contract enshrines this notion of ethics in Article 1 of the German Constitution: “Human dignity is inviolable. To respect and protect it shall be the duty of all state authority.”[i] That first principle serves as the ethical basis for German and European approaches on everything from privacy, like the EU’s General Data Protection Regulation (GDPR), to policy regarding the future of work.

The notion is closely aligned with, but distinct from, the Anglo-American notion of self-actualization. Think about the “pursuit of happiness,” a notion based on the human doing rather than the human being. Perhaps that is one reason why many data protection laws in the US are based on human activities, such as taking care of your health or managing your finances. These shades of difference have led to some titanic battles in the tech sector worldwide.

But these differences in liberal democracies remain firmly rooted in the centrality of the individual. That creates a number of conflict points with, for example, the Chinese government, which approaches data governance in a way centered on the notion of social harmony[ii]. These comparative differences have real-world impacts when considering AI research and development, automated decision making, human-in-the-loop authorization and consent, and data usage and safety. Ultimately, it impacts our relationship with technology and power.

What’s more – AI’s concentration in a small number of state actors could have under-examined implications for the Global South. Will they become “AI takers” to new data colonial empires from Silicon Valley and Beijing? What could it mean for countries in Latin America, Africa and Asia if critical media, behavior nudging and disinformation – all of which play important roles in democratic integrity at home – are increasingly operated and regulated abroad? Alternatively, could a lax regulatory environment in developing countries lead to testing laboratories for AI, and what positive and/or negative effects would this have?

How Millennials Perceive AI Ethics

Discussing what ethical AI should look like in the 21st Century.

The Aspen Institute - Germany

The NextGen project engages talented young leaders (ages 18-35) across the international Aspen community who sit at the cusp of political, economic, and moral leadership, and asks them what ethical AI should look like in the 21st century. A rising generation is bringing different value hierarchies to the world it wants to see. Values like sustainability and social justice are, in many cases, taking a more prominent place.

The workshops held in 2018 and 2019 during the first phase of the NextGen Network combined theoretical explorations of the most important guiding principles (like the centrality of social cohesion, human dignity, safety, productivity, competitiveness, fairness, transparency, and accountability) with practical discussions about public policy. They drew out similarities and differences across India, Mexico, Germany, France, the United Kingdom, Central Europe, and beyond, mapping different cultural, political, and economic conditions: corruption in Mexico; health care in Germany; entrepreneurship in France; education in India; security in Central Europe; and inclusion and social justice in the UK.

This project did not seek to find answers to the big questions. Rather, it followed the breadcrumbs through six cities (Mexico City, Berlin, Prague, New Delhi, Paris, and London) to see how AI ethics can ultimately be translated into legal, social, and political conditions that can make life more meaningful. The stakes couldn’t be greater. What follows are some key findings.

by Tyson Barker

Program Director and Fellow at The Aspen Institute Germany

Ideas from the NextGen Network

How Should We Use and Govern AI?

In discussions with over 130 young people across the Network, it was undisputed that AI will have profound impacts on society and that little has been done to assuage fears of this new technology. Although the topics varied from one workshop to another, participants felt it was unequivocal that AI algorithms should be transparent and checked for biases, and the real risks and opportunities communicated to the public.

From Mexico, Germany, and Central Europe to India, France, and the UK, distinct guiding principles and recommendations emerged on how AI should be used, governed, and incorporated into national strategies, as well as on how countries, the international community, companies, and individuals should respond to and leverage nascent technologies like AI. Without ethical governance, AI cannot be truly dependable or woven into every facet of daily life.

How Should AI Be Used?

AI can increase access to education, one of the most powerful tools to reduce poverty and inequality.

Discourse around AI unearths the uncertainty and fear many feel because of the threat AI poses to jobs, privacy, and security. AI can make one simultaneously enthusiastic and fearful about how the technology could improve and destroy jobs. Despite the pertinent concerns, technology like AI also has the possibility to deliver more effective and efficient services to citizens if implemented correctly.

AI can help further social goals in countries like Mexico by improving financial inclusion, fighting corruption, and reducing crime. In Central Europe, AI can enable people from different backgrounds to work together without a common language or locale. In Germany, France, India, and the UK, with secure data protection, there is untapped potential for AI to improve national health care services and the delivery of speedy and personalized treatment. The six distinct NextGen workshops underscored that the adoption of AI could help tackle major challenges like resilient infrastructure, autonomy and high costs for national security systems, and sustainable food systems, but only if adopted in a responsible way.

That AI will destroy jobs is not predetermined; in fact, AI could generate more jobs than will be lost. Given the uncertain impact of AI on employment, young people should ensure they are prepared with the skills to match jobs in the future, not just the jobs available today.

AI can increase access to education, one of the most powerful tools to reduce poverty and inequality, by offering personalized learning and teaching materials at low costs. Across NextGen countries, basic instruction about AI in primary and secondary education would increase awareness about AI starting at a young age and identify ways for people to use AI for everyday life. Developing critical thinking skills towards what AI recommends is necessary to maintain the user’s agency and empower communities, instead of allowing service providers and tech companies to decide.

Outside of traditional education, training and skill-building programs can help cultivate talent and prepare citizens for employment in the modern economy. However, education and training are not a panacea for larger structural changes: the development of soft skills like communication and collaboration is equally essential to prepare for an ambiguous future workforce.

The quality of jobs in the future is also in question. However, uncertainty about AI’s impact on jobs provides an opportunity to redesign tasks and reimagine how to instill dignity in work. Learning from history, countries like France could avoid the alienation and inequality that many workers experienced from the Industrial Revolution with intentional policies for AI to improve current jobs. Incorporating feedback from employees and organizations, such as unions, as well as those most impacted by AI could limit disruption to people’s livelihoods. For countries like India, the benefits of AI for the workforce could be realized by automating repetitive activities and creating frameworks for humans and machines to collaborate on tasks. If countries wish to effectively train and educate people for future, high-quality jobs, research and mapping are essential to determine where AI can support existing professions, create new ones, and streamline the education system.

How Should AI Be Governed?

The challenge of balancing the benefits of AI with fears about its repercussions is not limited to one country alone.

Throughout history, people have reacted adversely to the introduction of new technology, from books and the telephone to cars and the personal computer. While new technologies like AI promise to improve efficiency and competitiveness for countries, citizens, and companies, the negative impacts on society should be minimized through governance. Many participants underscored that regulation and testing are essential to fully understand an AI system’s implications before it is rolled out in society. Such a policy, however, is easier to demand of companies and governments than to implement. For Germany, quickly introducing AI should require accompanying oversight of the technology by companies and government. Yet, cautiously introducing AI is not always a priority. Central Europe and Mexico, for example, prefer the quick application and adoption of AI in order to be competitive with technology leaders like the US and China.

The challenge of balancing the benefits of AI with fears about its repercussions is not limited to one country alone. All governments would benefit by examining and accurately conveying how AI will change the delivery of services for citizens, and not just as a technology that will disrupt daily life. By dispelling misconceptions and gathering feedback, governments could more successfully adopt AI, and citizens could ensure that the decision-making process is transparent and protects against unethical practices and biases.

Regulation in the EU has been largely successful due to the existing trust in public institutions. Because of this, novel quasi-governmental institutions, as suggested by Germany and Central Europe, could help vet new AI technologies and create policies with companies to ensure the technologies align with core European values. These policies could then be transposed into the national legislation of EU Member States, which would support consistency and data protection.

An EU-wide labeling system, similar to ecological standards for household appliances or organic foods, could inform consumers about the technology’s creation, contents, and capabilities — including how consumers’ data would be used. Actions such as these would help the EU become a leader to mitigate ambivalence surrounding AI, given that it has some of the world’s highest consumer protection regulations. This idea, however, would not necessarily be applicable in countries without sufficient consumer protections or trust in governmental institutions.

Participants proposed independent advisory boards as a mechanism to include voices from government, technology, and non-governmental institutions. For the UK, these boards could play a key role in shaping decisions on best practices and systems to regulate new technologies. Similarly, in France, incorporating feedback from groups adversely affected by automation, disenfranchisement, and inequality could inform the development of AI to ensure that technological innovation is tied closely to social impact and mitigates the negative disruptions to society.

Establishing rules to design and develop AI is important, but participants in Central Europe recommended the region avoid over-regulation to prevent stifling innovation and competitiveness. The only country for which participants suggested governing AI through an international lens was India, proposing that the Universal Declaration of Human Rights be an anchor to address governance of ethical AI, given the socioeconomic challenges unique to the Indian context. It could be that each country develops its own AI systems with little regulation, or that countries export AI technologies to others in a fair and ethical way. From these discussions, it was apparent that an international solution to govern and regulate AI would not be politically feasible, or necessarily desired, for all the NextGen countries.

What Should A National Strategy on AI Look Like?

A commitment to international dialogue, public debate of ethical issues, and data privacy amid global AI competition.

Mexico was the first country in Latin America, and one of the first 10 countries in the world, to launch an AI strategy, although the adoption of AI has not been as swift as in other NextGen countries. It is essential for adoption of AI in Mexico that industry and civil society communicate AI’s impact to the government, especially across changing administrations. In addition, the private sector and government must coordinate efforts, resources, and time toward new strategies that have a broader impact on society.

Despite the focus on German competitiveness in the government’s recent AI strategy, a holistic European solution would better position Germany to play to its strengths — such as a commitment to international dialogue, public debate of ethical issues, and data privacy — amid global AI competition. This could make Germany and Europe key players in forging a socially conscious path for future AI applications that could compete with more individualistic, market-driven efforts spearheaded by the US, or the capital-intensive, state-aligned efforts of China. EU member states are working to leverage the bloc’s competitive advantages; the European Commission has pushed an EU-level agenda to cooperate on AI, including a high-level expert group, and work with the OECD and UNESCO on a global framework for AI ethics. Regulations within the EU in the coming years will reveal how much of AI is strategic and nation-specific, and how much is transnational.

Participants in Central Europe recommended the EU help introduce a roadmap for European companies in Central Europe to adopt and use AI that protects the principles of society, such as privacy, and leaves space for AI improvement and technological development. Similarly, as the biggest adopter of AI, the Indian government would benefit from a clear framework to identify responsible AI and where it can augment human capacity. With a national strategy, governments would be able to proactively address critical issues on AI related to ethics and bias, and ensure that users can confidently and transparently use AI.

As for the UK, the government should focus national efforts on ownership of data and economic redistribution programs to give citizens a share of tech companies’ success. Like that of its northern neighbor, France’s AI strategy is also very national; however, without a continent-wide focus, it will be difficult to use and implement large data sets for AI. In the ambiguous future of an EU without the UK, France could benefit from the return of many London-based startups, which could also lead to fragmentation between the two countries. Paris has begun competing with European cities like Amsterdam, Frankfurt, and Berlin – all vying to be the next hub for AI innovation.

Guiding Principles and Recommendations

The discussions across the Network underscored how the NextGen countries differ in values and guiding principles. There was a consensus that companies should create an internal “AI ethics officer” to oversee the ethical and transparent implementation of AI, which would encourage companies to take responsibility for assessing their biases and develop their own ethics protocols. In addition, participants from each NextGen country voiced widespread concern about how data is being collected, stored, and used. It is essential that steps be taken with governments and companies to ensure that data collection is secure and does not violate consumers’ rights or agency.

Core Values to Guide AI Technology

Core values and principles to guide adoption and development of ethical AI.

Each NextGen country underscored its distinct core values and principles to guide adoption and development of ethical AI. It is clear that an international ethics system would be virtually impossible, given the challenges of nation-specific ethics and values.

Mexico

Government, civil society, the private sector, and the customers and end users of technology have a shared responsibility to regulate and govern AI through a multi-stakeholder approach.

Germany

Regulation and development of AI should be done within established value frameworks, instead of reinventing the wheel. Human dignity, trust and transparency, user control and agency over data, and convenience of technological application should guide AI policymaking.

Central Europe

Explainability, transparency, inclusivity, and diversity should be instilled in each AI technology. People need to have the final say over AI and help steer AI toward appropriate, ethical decision making.

India

Privacy, inclusivity of access, autonomy, agency, and the right to choose are sacrosanct. AI should be used to promote more inclusive growth and include marginalized individuals into the mainstream.

France

The right to organize and defend one’s rights, transparency around decision making and algorithm bias, and freedom to innovate and move should guide AI development and regulation. It is essential for people to find work they can be proud of and take ownership over, even as AI risks increasing the distance between work and impact.

United Kingdom

Economic benefits of new technology should be redistributed throughout society. Transparency and accountability of data and algorithms behind AI and inclusivity of people from diverse backgrounds to check AI for biases should be employed to ensure companies use AI responsibly.

by Calli Obern

Senior Program Manager, International Partners - The Aspen Institute

Contact Information

For questions about the NextGen Network, please reach out to the International Partners team at internationalpartners@aspeninstitute.org

NEXTGEN MEMBER INSIGHTS

How should AI be used to combat COVID-19?

With a heartfelt understanding of how it will also affect values such as privacy and data protection

To report information to people more effectively.

To track the spread and symptoms, and to help support those in isolation.

To combine insights from medical research around the world.

Tracking, diagnosis and fighting disinformation.

Track symptoms and infected people, and model possible vaccines.

Simulations to assess different scenarios and outcomes of COVID-19 response measures, as well as support in developing a vaccine and COVID-19 medication.

To understand infection pathways and evaluate existing studies.

Simulations to assess potential consequences under different conditions.

Bots for telemedicine.

To analyze health data to create vaccines.

This is the first report from the NextGen Network, outlining perspectives from the next generation around how artificial intelligence could address major societal challenges and reduce the technology’s negative impacts. Conversations with members across the Network, including a survey, informed how this technology should be developed and deployed.

We hope these perspectives can advance the conversation around using technology for good and ensuring current and future generations can benefit from the potential offered by AI.